The confidence gap in AI

AI has officially entered the customer service stack. Most leaders agree the question is no longer whether to adopt it but how fast they can scale it. The potential is clear: faster resolutions, lower costs, and always-on support.

But beneath the excitement, many leaders hesitate. Not because they doubt AI’s capabilities, but because they lack visibility, control, and, most critically, confidence.

At Kustomer, we’ve spent the last year speaking with CX and operations leaders. The same questions came up again and again:

“How do I know if this agent is really ready for customers?”

“How do I prove performance without slowing everything down?”

“What happens when an agent gets stuck once it’s live?”

These aren’t technical questions. They’re trust questions. And until leaders can answer them, AI projects stall in pilot mode.

Why trust falls short

Most organizations still test AI the old-fashioned way: a handful of sample scenarios, a spreadsheet, and a thumbs-up when things “look good.” That might work for deterministic software, where the same input always produces the same output, but it doesn’t work for generative AI.

Generative AI is probabilistic. Rather than returning a fixed, pre-written answer, it generates each response based on probabilities, so the same prompt can yield slightly different outputs from one run to the next. One test might pass by chance; another might fail as an anomaly.
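
As a toy illustration of what "based on probabilities" means, the sketch below picks the first word of a reply from made-up scores using softmax sampling with a temperature, a common decoding technique. Real models sample over far larger vocabularies, one token at a time. The scores and the `sample_next` helper are hypothetical and exist only for illustration.

```python
import math
import random


def sample_next(logits: dict, temperature: float, rng: random.Random) -> str:
    """Pick one candidate word by softmax sampling over scores scaled by temperature."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    top = max(scaled.values())
    # Subtract the max score before exponentiating for numerical stability.
    weights = {tok: math.exp(s - top) for tok, s in scaled.items()}
    tokens = list(weights)
    return rng.choices(tokens, weights=[weights[t] for t in tokens], k=1)[0]


# Made-up scores for the first word of a reply to "Where is my order?"
logits = {"Your": 2.0, "It": 1.5, "Sorry,": 0.5}
rng = random.Random(42)
print([sample_next(logits, temperature=1.0, rng=rng) for _ in range(10)])
```

Running it with different seeds changes the sequence of words, even though the prompt and scores never change; lowering the temperature toward zero makes the top-scoring word win almost every time.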

Without running tests at scale and analyzing patterns, there’s no way to know if an AI agent is truly reliable. Manual, one-off tests create a dangerous illusion of readiness. This is why so many pilots stall.
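
To see why a handful of passing tests proves little, here is a minimal sketch using the Wilson score interval, a standard way to bound a true pass rate from a limited number of trials. It illustrates the statistics only; it is an assumption, not a description of how Kustomer computes results.

```python
import math


def wilson_interval(passes: int, runs: int, z: float = 1.96) -> tuple:
    """95% Wilson score interval for the true pass rate, given observed passes out of runs."""
    p_hat = passes / runs
    denom = 1 + z**2 / runs
    center = (p_hat + z**2 / (2 * runs)) / denom
    margin = z * math.sqrt(p_hat * (1 - p_hat) / runs + z**2 / (4 * runs**2)) / denom
    return (max(0.0, center - margin), min(1.0, center + margin))


# Three manual checks that all "look good" versus 95 passes in 100 automated runs.
print(wilson_interval(3, 3))     # roughly (0.44, 1.0)
print(wilson_interval(95, 100))  # roughly (0.89, 0.98)
```

Three passes out of three is still consistent with an agent that succeeds only about 44% of the time, while 95 passes out of 100 narrows the plausible range to roughly 89% to 98%.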

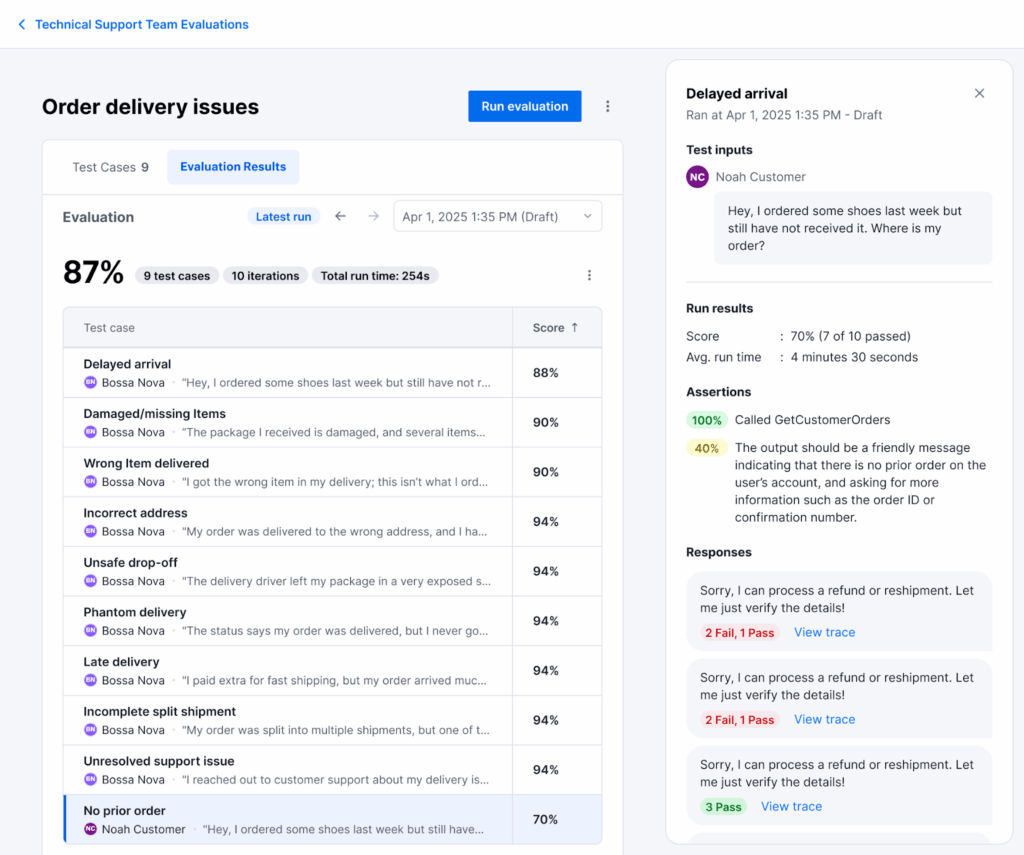

From guesswork to evidence: AI Agent Evaluations

AI Agent Evaluations turn this process from subjective guesswork into enterprise-grade validation. Instead of testing once and hoping for the best, leaders and their teams can define benchmarks that align with business priorities, such as accuracy, tool usage, or knowledge base references. They can run tests at scale, not once but dozens or even hundreds of times per scenario, to capture variability and make results statistically meaningful. And they can rely on automated grading: every run is scored against the defined benchmarks, instantly highlighting where agents meet standards and where they fall short.
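
A minimal sketch of that workflow, assuming an agent that can be called as a Python function: each benchmark is a grading function, the same scenario runs many times, and the result is a pass rate per benchmark. `AgentRun`, `Benchmark`, `fake_agent`, and `evaluate` are hypothetical names, not part of any Kustomer API, and the stand-in agent's 85% success rate is made up.

```python
import random
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class AgentRun:
    """Hypothetical record of one agent run: the reply plus any tools it called."""
    reply: str
    tools_called: List[str]


@dataclass
class Benchmark:
    """A named grading function that returns True (pass) or False (fail)."""
    name: str
    grade: Callable[[AgentRun], bool]


def fake_agent(prompt: str, rng: random.Random) -> AgentRun:
    """Stand-in for a real AI agent: usually looks up the order, sometimes skips it."""
    if rng.random() < 0.85:
        return AgentRun("Your order shipped on Monday.", ["lookup_order"])
    return AgentRun("I'm not sure where your order is.", [])


def evaluate(prompt, agent, benchmarks, runs=100, seed=0):
    """Run the same scenario many times and return the pass rate for each benchmark."""
    rng = random.Random(seed)
    passes = {b.name: 0 for b in benchmarks}
    for _ in range(runs):
        result = agent(prompt, rng)
        for b in benchmarks:
            if b.grade(result):
                passes[b.name] += 1
    return {name: count / runs for name, count in passes.items()}


benchmarks = [
    Benchmark("used_order_tool", lambda r: "lookup_order" in r.tools_called),
    Benchmark("answered_status", lambda r: "shipped" in r.reply),
]
print(evaluate("Where is my order #123?", fake_agent, benchmarks, runs=200))
```

Seeding the random generator keeps the simulated runs reproducible; against a real model, the variability would come from the model itself, which is exactly what repeated runs are meant to capture.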

This means readiness is no longer a matter of opinion. Leaders can see proof, in data rather than anecdotes, that their agents are ready for customers. When testing is automated and repeatable, teams move with both speed and confidence. Early users of AI Agent Evaluations found that a test that took 2-3 minutes to run manually now takes less than 20 seconds. For administrators running hundreds of tests, that adds up to hours saved, and because tests can be set to run automatically, admins can do other work while they complete.

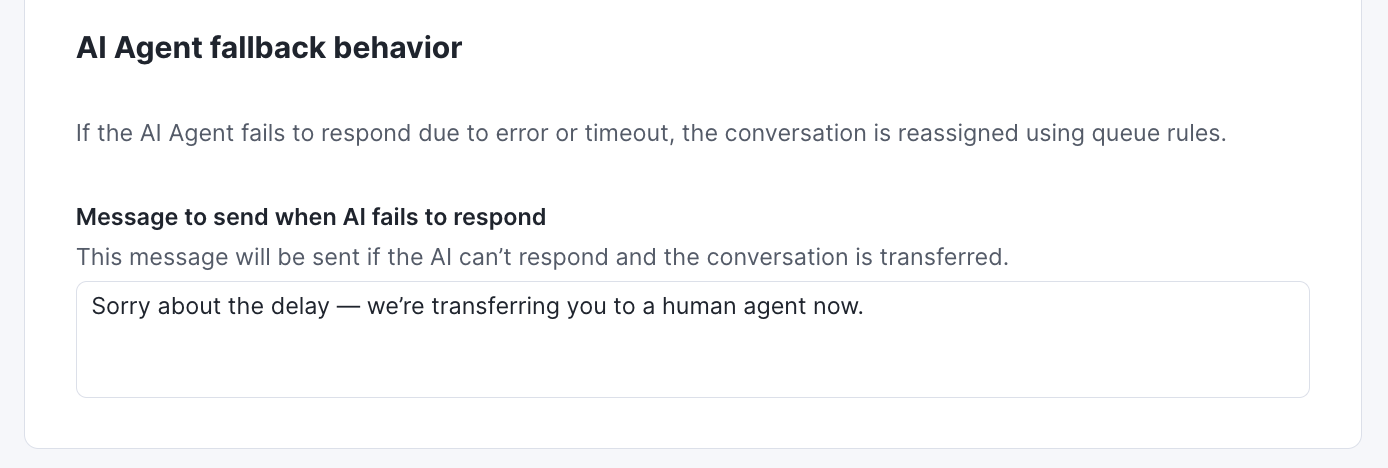

AI Conversation Safeguards that protect customers and brands

Even the best-tested AI agents will encounter surprises in production. An agent might stall. OpenAI might experience an outage. A conversation might veer into an edge case no one predicted.

Without controls, those moments become dead ends that frustrate customers and force staff to step in manually.

AI Conversation Safeguards prevent this. They monitor every interaction in real time, detect when progress stops, and automatically take corrective action by escalating to a human, rerouting to a queue, or closing the case gracefully. Later this fall, AI Conversation Safeguards will also handle abandoned conversations: when a customer stops responding for a defined period of time, the open conversation will be automatically transferred or closed.
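
The timeout logic described above can be sketched as a check that runs periodically over each open conversation. This is a hypothetical illustration, not Kustomer's implementation: the `Conversation` fields, both thresholds, and the action names are assumptions, and the sketch models only two of the corrective actions (escalating to a human and closing).

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Action(Enum):
    ESCALATE_TO_HUMAN = "escalate_to_human"
    CLOSE_CONVERSATION = "close_conversation"


@dataclass
class Conversation:
    last_agent_progress: float    # timestamp (seconds) when the AI agent last made progress
    last_customer_message: float  # timestamp (seconds) of the customer's last message
    awaiting_agent: bool          # True if the customer is waiting on the AI agent


# Hypothetical thresholds; a real deployment would make these configurable.
STALL_TIMEOUT_S = 60
ABANDON_TIMEOUT_S = 15 * 60


def check_safeguards(conv: Conversation, now: float) -> Optional[Action]:
    """Return a corrective action if the conversation is stuck, otherwise None."""
    # The AI agent owes a reply but has made no progress: hand off to a human.
    if conv.awaiting_agent and now - conv.last_agent_progress > STALL_TIMEOUT_S:
        return Action.ESCALATE_TO_HUMAN
    # The customer has gone quiet: close instead of leaving the conversation open.
    if not conv.awaiting_agent and now - conv.last_customer_message > ABANDON_TIMEOUT_S:
        return Action.CLOSE_CONVERSATION
    return None
```

Keeping the check a pure function of the conversation state and the current time makes each rule easy to test in isolation before it is wired into a scheduler.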

For leaders, this means every conversation reaches a resolution. For customers, it means no more getting stuck in limbo. And for teams, it means fewer manual interventions and more time spent on higher-value work. Safeguards turn worst-case scenarios into managed outcomes, protecting both the customer experience and the brand.

Governance that builds trust in AI

For years, AI in CX was judged on speed and accuracy. But the next frontier is trust. Leaders are asking: Can I rely on this system to perform at scale, without constant oversight? Companies that treat AI governance as a first-class priority will be the ones that can answer yes, and they will move faster, scale more effectively, and protect brand trust along the way.

Kustomer AI builds governance into its foundation. AI Agent Evaluations provide automated, repeatable testing and statistical proof of readiness. AI Conversation Safeguards ensure no customer is ever left at a dead end, and provide visibility and reporting so leaders know not only what's working but where to improve.

“With generative AI, it’s an entirely different game. The strength of AI Agents lies in their advanced reasoning, but that also means they can produce different variations of a response to the same question. This requires us to rethink how we test and deploy, with validation at scale before launch and safeguards in production, to ensure every customer conversation stays on track.”

Jeremy Suriel, Co-Founder & CTO, Kustomer

What’s Next?

The story of AI in customer service is no longer about possibility. It’s about precision. It’s about moving past pilots with confidence, backed by evidence, not faith. Launching AI is easy. Launching it with confidence is the real unlock.

AI Agent Evaluations and AI Conversation Safeguards are just the beginning of our push to give you confidence in our AI. Next up: Reasoning Path Analytics. This new capability will give leaders full visibility into AI agent decision paths, eliminating guesswork from automation. With it, admins can quickly diagnose misrouting, identify bottlenecks, and refine instructions in minutes instead of hours.

Both AI Agent Evaluations and AI Conversation Safeguards are now available in AI Agents for Customers at no additional cost. To learn more about AI Agents for Customers, book a demo.